Article In Press : Article / Volume 3, Issue 2

- Research Article | DOI:

- https://doi.org/10.58489/2836-2217/021

ChatGPT and Its Role in Outpatient Departments

1HITEC Institute of Medical Sciences, Taxila, Pakistan.

2CMH Lahore Medical and Dental College, Lahore, Pakistan.

3Muhammad Nawaz Shareef University of Agriculture, Multan

Dr. Ayesha Bukhari*

Ayesha Bukhari, et al. (2024). ChatGPT and Its Role in Outpatient Departments. Clinical Case Reports and Trails. 3(2). DOI: 10.58489/2836-2217/021

© 2024 Ayesha Bukhari. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

- Received Date: 02-09-2024

- Accepted Date: 30-09-2024

- Published Date: 02-10-2024

Keywords: ChatGPT, Outpatient Departments, Residents, Physicians.

Abstract

Background: ChatGPT is a language-based model built with a ‘super brain’ containing a database with adequate medical knowledge used in diagnosis, investigations and management of diseases.

Objectives: This study aims to explore the possibility of using ChatGPT as an assistive tool in outpatient clinics.

Methods: This is a descriptive cross-sectional study. It targeted a population of 259 postgraduate residents and consultants from reputable institutions of Punjab, recruited by convenience sampling. The data collection tool was a structured questionnaire shared via Google Forms and distributed manually. It assessed physicians' use of ChatGPT, its perceived usefulness in the management of patients, and its role in the prevention of disease and improvement of the healthcare system.

Results: Responses were recorded on a 5-point scale. The sample included more female physicians, 138(53.3%), than male physicians, 121(46.7%).

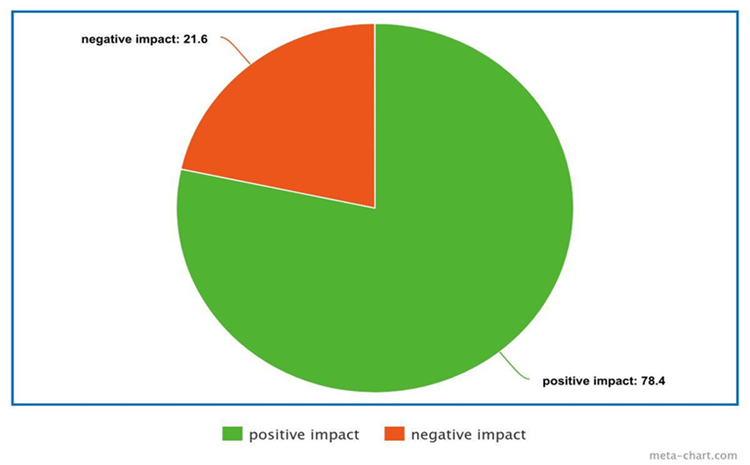

A majority of physicians found ChatGPT to be efficacious in outpatient settings (59.1%). A substantial percentage of respondents highlighted a decrease in burnout (61%), aid in correct diagnosis (62.5%) and adequate treatment plans laid out by ChatGPT (46.7%). Overall, 78.4% of the participants believed that ChatGPT has a positive impact in outpatient departments.

Conclusion:

ChatGPT shows significant potential to serve as a cognitive assistant to physicians as it is integrated into our healthcare system. It is set to improve the outpatient experience for both physicians and patients.

Introduction

Artificial intelligence (AI) is an up-and-coming technology that is making headlines with its imitation of a ‘super brain’ capable of storing, extracting, actively learning, rewriting, and adapting according to the needs of the user [1]. ChatGPT is a recently launched chatbot [2]. With a large amount of clinical knowledge and a data bank containing almost all required medical information, large language models like ChatGPT could be the assistive technology needed to ensure that physicians can provide the best healthcare experience for patients [3]. ChatGPT not only provides a massive bank of information but also has the ability to adapt and learn with each user experience, which makes providing good-quality, error-free healthcare highly achievable [1,3,6].

In addition to providing essential medical information for the treatment of patients, ChatGPT can produce patient-friendly language and summaries that help physicians communicate more easily and effectively with patients and attendants [1]. ChatGPT is capable of analyzing new data input by identifying patterns and matching them with its knowledge reserve to generate specific answers that are relevant to the new data, making it an effective tool for assisting physicians with the diagnosis and management of almost all medical situations. It has also been shown to be beneficial in writing patient discharge summaries and clinical letters [7,8]. In addition, research has been done to gauge the capacity of LLMs such as ChatGPT to make clinical decisions based only on laboratory and radiological findings, independent of any external input, which has provided deeper insight into the actual capabilities of this AI [1,4]. ChatGPT outshines other AI models in terms of its fluency in multiple languages, ability to create coherent and meaningful text, advanced understanding of text, enhanced communication abilities and potential to assist medical practitioners in analyzing data, medical records and symptoms. It can devise treatment plans and aid in patient diagnosis. Moreover, it can play an important role in mental health counseling and patient triage and can promote patient engagement while encouraging adherence to treatment plans through timely reminders [13].

Despite its potential benefits, ChatGPT presents some novel obstacles and approaches to education [11]. These include irrelevant references, copyright law violations, medico-legal difficulties, inability to distinguish between credible and unreliable sources, and accuracy issues [9,12]. In addition, AI generates new information based on a specific set of previously learned inputs; its reliance on algorithms and probabilities could lead to a lack of empathy or of focus on the financial and emotional needs of patients [10].

As a result, it cannot operate as an autonomous unit but is more helpful in assisting medical professionals in providing efficient healthcare [5].

While there is no current literature available on the user experience of physicians using ChatGPT to assist in outpatient clinics, the digital age has brought about a new phase of healthcare requirements. This study aims to assess the physicians' reported efficiency of ChatGPT as a cognitive assistant in outpatient disease diagnosis and care and to address the gap in the literature regarding their user experience.

With correct use, ChatGPT can help overcome the overall healthcare deficit created by the poor quality of patient-physician interaction, making life easier for both parties involved.

Methods

This descriptive cross-sectional study was carried out at BMY Health over a period of three months, from May to July 2023, after approval by the ethics review committee (ERC), protocol number BMY-ERC1-10-2023. Doctors in Punjab province were approached through social media using convenience sampling. The target population was licensed physicians who use ChatGPT at outpatient clinics and have internet access. The representative sample size was calculated to be 259 using the sample size calculator OpenEpi [14]. The tool for data collection was a structured questionnaire written in English. It was shared via Google Forms as well as through hard copies to ensure that the desired sample size was achieved. Questions covered age, gender, speciality, usage of ChatGPT in outpatient clinics and its impact on healthcare. Recommendations regarding ChatGPT were also requested. The data were analyzed using IBM SPSS (Statistical Package for the Social Sciences) Version 29.0. Descriptive statistics, including frequency and percentage, were calculated for categorical variables. Mean, median and standard deviation were calculated for age and income level. Additionally, Pearson’s test was applied to find correlations between the variables. Information was kept anonymous and used strictly for research purposes.
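The article states that the sample size was obtained with OpenEpi but does not report the inputs. As a hedged illustration only, the sketch below implements the standard sample-size formula for estimating a proportion with a finite population correction, n = DEFF·N·p(1−p) / [(d²/Z²)(N−1) + p(1−p)], which to our understanding is the formula OpenEpi uses for this purpose. The function name and every input value (population size, anticipated proportion, margin of error) are assumptions chosen for illustration; they are not the study's values and are not expected to reproduce 259.

```python
import math


def sample_size_proportion(population, p, d, z=1.96, deff=1.0):
    """Sample size for estimating a proportion, with finite population correction.

    population: size of the target population (N)
    p:          anticipated proportion (0 < p < 1)
    d:          absolute margin of error (e.g. 0.05 for +/- 5%)
    z:          standard normal quantile for the confidence level (1.96 for 95%)
    deff:       design effect (1.0 for simple random sampling)
    """
    numerator = deff * population * p * (1 - p)
    denominator = (d ** 2 / z ** 2) * (population - 1) + p * (1 - p)
    # Round up so the target precision is not undershot.
    return math.ceil(numerator / denominator)


# Hypothetical inputs, NOT taken from the study.
example_n = sample_size_proportion(population=1_000_000, p=0.5, d=0.05)
print(example_n)
```

A wider margin of error or an anticipated proportion further from 0.5 lowers the required sample size, which is why the inputs need to be reported for a calculated sample size to be reproducible.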

Results

In one month, the calculated sample size of 259 was achieved. The participants consisted of 138(53.3%) females and 121(46.7%) males. The age of the participants ranged from 24 to 67 years, with a mean of 42.89 years and a median of 42 years. In terms of qualifications, 85(32.8%) of the participants were postgraduate residents and 174(67.2%) were consultants. The income ranged from 50,000 to 300,000 with a mean of 120,000.

When asked whether using ChatGPT improves efficiency at outpatient clinics, 48(18.5%) respondents were sure, 131(50.6%) thought it was likely, 64(24.7%) thought it was not likely, 10(3.9%) thought it improves efficiency to some extent, while 6(2.3%) thought it did not.

Table 1: ChatGPT usage improves efficiency in outpatient clinics

| Sure | Likely | Not Likely | To some extent | No |
|---|---|---|---|---|
| 18.5 % | 50.6 % | 24.7 % | 3.9 % | 2.3 % |
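The percentages in Table 1 can be checked against the counts reported in the text. The sketch below uses only those counts and the sample size of 259; it recomputes each percentage and confirms that the counts add up to the full sample. The helper name `to_percentages` is illustrative and is not part of the study's analysis, which was carried out in SPSS.

```python
def to_percentages(counts, total):
    """Return each count as a percentage of total, rounded to one decimal place."""
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


SAMPLE_SIZE = 259

# Counts as reported in the text for the efficiency question (Table 1).
table1_counts = {
    "Sure": 48,
    "Likely": 131,
    "Not likely": 64,
    "To some extent": 10,
    "No": 6,
}

# Every participant should appear in exactly one response category.
assert sum(table1_counts.values()) == SAMPLE_SIZE

table1_percentages = to_percentages(table1_counts, SAMPLE_SIZE)
for label, pct in table1_percentages.items():
    print(f"{label}: {pct}%")
```

The same check can be applied to any other question in the Results by swapping in its counts.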

When asked how ChatGPT compares to human doctors, 45(17.4%) participants thought that ChatGPT understands medical terminology and jargon, 155(59.8%) thought it was likely, and 19(7.3%) thought it was not likely; 39(15.1%) participants thought ChatGPT understood medical terminology and jargon to some extent, while 1(0.4%) thought it did not.

Regarding experience, 2(0.8%) participants thought that ChatGPT gives a more personalized experience than human doctors, 108(41.7%) thought it was likely, and 121(46.7%) thought it was not likely; 11(4.2%) participants thought that ChatGPT gives a more personalized experience to some extent, and 16(6.2%) thought it did not.

When asked whether ChatGPT caters to the needs of individual patients while prescribing (considering contraindications, common side effects, and other ongoing health conditions), 17(6.6%) participants thought it was likely, 41(15.8%) thought it was not likely, 9(3.5%) thought it caters to some extent, while 192(74.1%) thought it did not.

When asked about the ability of ChatGPT to provide emotional support during appointments, 7(2.7%) participants thought it is likely, 147(56.8%) thought it was not likely, 37(14.3%) thought it can provide emotional support to some extent, while 68(26.3%) thought it cannot.

Regarding recommendations for lifestyle changes and other non-medical interventions, 20(7.7%) participants thought that ChatGPT provides them, 179(69.1%) thought it was likely, 26(10%) thought it was not likely, 26(10%) thought it provides recommendations to some extent, while 8(3.1%) thought it did not.

When asked whether ChatGPT provides accurate medical advice, 161(62.2%) participants thought it is likely, 49(18.9%) thought it was not likely, 23(8.9%) thought it can provide accurate medical advice to some extent, while 26(10%) thought it cannot.

When asked whether ChatGPT has the potential to replace human doctors in outpatient settings, 3(1.2%) participants thought it is likely, 30(11.6%) thought it was not likely, 19(7.3%) thought it can replace them to some extent, while 207(79.9%) thought it cannot.

Table 2a: ChatGPT in comparison to human doctors

| Aspects of ChatGPT | Yes % | Likely % | Not likely % | To some extent % | No % |
|---|---|---|---|---|---|
| Understands medical jargon | 17.4 | 59.8 | 7.3 | 15.1 | 0.4 |
| Personalized experience | 0.8 | 41.7 | 46.7 | 4.2 | 6.2 |
| Lifestyle recommendations and counseling regarding non-medical interventions | 7.7 | 69.1 | 10 | 10 | 3.1 |

Table 2b: ChatGPT in comparison to human doctors

| Aspects of ChatGPT | Likely % | Not likely % | To some extent % | No % |
|---|---|---|---|---|
| Caters to individual needs when prescribing medicine | 6.6 | 15.8 | 3.5 | 74.1 |
| Emotional and empathetic approach during appointments | 2.7 | 56.8 | 14.3 | 26.3 |
| Accurate medical advice | 62.2 | 18.9 | 8.9 | 10 |
| Potential to replace human doctor | 1.2 | 11.6 | 7.3 | 79.9 |

When asked about ChatGPT's impact on physician burnout in the outpatient setting, 158(61%) participants thought it is likely to reduce burnout, 33(12.7%) thought it was not likely, 17(6.6%) thought it reduces burnout to some extent, while 51(19.7%) thought it cannot.

Regarding the possibility of reducing healthcare costs in the long run, 18(6.9%) participants thought that it can, 145(56%) thought it was likely, 32(12.4%) thought it was not likely, 19(7.3%) thought that ChatGPT would reduce costs to some extent, and 45(17.4%) thought it could not.

Table 3: Impact of ChatGPT on physician burnout

| Impact of ChatGPT | Likely | Not likely | Some extent | No |
|---|---|---|---|---|
| Reducing physician burnout | 61% | 12.7% | 6.6% | 19.7% |

Table 4: ChatGPT to reduce healthcare costs

| Impact of ChatGPT | Yes | Likely | Not likely | To some extent | No |
|---|---|---|---|---|---|
| Reducing healthcare costs | 6.9% | 56% | 12.4% | 7.3% | 17.4% |

When asked about ChatGPT's ability to make a correct diagnosis, 17(6.6%) participants thought that it diagnoses patients with 90 percent accuracy, 80(30.9%) thought it diagnoses with 80 percent accuracy, while 162(62.5%) thought it is 70 percent accurate in diagnosing patients.

Regarding recommending accurate investigations, 184(71%) participants thought that ChatGPT recommends investigations with 90 percent accuracy, 62(23.9%) thought it does so with 80 percent accuracy, while 13(5%) thought it is 70 percent accurate in recommending investigations.

When asked whether ChatGPT can devise accurate treatments, 6(2.3%) participants thought that it treats patients with 90 percent accuracy, 121(46.7%) thought it treats with 80 percent accuracy, while 132(51%) thought it is 70 percent accurate in treating patients.

Table 5: Accuracy of results (% of participants selecting each accuracy level)

| Feature | 90% accurate | 80% accurate | 70% accurate |
|---|---|---|---|
| Diagnoses | 6.6 | 30.9 | 62.5 |
| Investigations | 71 | 23.9 | 5 |
| Treatments | 2.3 | 46.7 | 51 |

Regarding the ethical aspect of ChatGPT usage, 1(0.4%) participant thought it is ethical to solely use ChatGPT for diagnosis, investigations and treatment, 18(6.9%) thought it is not likely to be ethical, 18(6.9%) thought it is ethical to some extent, while 222(85.7%) participants thought it is not.

Table 6: Ethical parameters for sole use of ChatGPT in patient management

| Response | % of participants |
|---|---|
| Ethical | 0.4% |
| Not likely | 6.9% |
| Ethical to some extent | 6.9% |
| Not ethical | 85.7% |

Regarding the impact of ChatGPT usage in outpatient departments, 203(78.4%) participants thought that it had a positive impact, while 56(21.6%) thought it had a negative impact.

When asked about recommending ChatGPT usage to fellow doctors, 5(1.9%) participants said they would recommend the use of ChatGPT, 132(51%) thought it is likely, and 122(47.1%) thought it is not likely.

Fig 1: Impact of ChatGPT use in outpatient clinics

Table 7: Further recommendation of ChatGPT to other doctors

| Yes | Likely | Not likely |
|---|---|---|
| 1.9% | 51% | 47.1% |

Regarding suggestions for ChatGPT usage in outpatient departments, 93.8% thought it should have accurate medical knowledge, 27.8% thought it should use empathetic sentences, 57.9% thought it should emphasize the importance of disease prevention, 74.1% thought it should correlate with other ongoing medical conditions and 71.4% thought it should be time efficient.

Table 8: Suggestions for improving ChatGPT for use in outpatient clinics

| Suggestions for improving ChatGPT for use in outpatient clinics | % recommended |
|---|---|
| Accurate medical knowledge | 93.8 |
| Empathetic sentences | 27.8 |
| Reiterate importance of prevention of disease | 57.9 |
| Correlate with other medical conditions | 74.1 |
| Time efficient | 71.4 |

Older physicians were more likely to think that ChatGPT improves efficacy at outpatient departments, and this correlation was significant.

Similarly, older physicians were more likely to think that ChatGPT will reduce the burnout of doctors in outpatient departments, and this correlation was also significant.

Physicians with higher education were more likely to think that ChatGPT improves efficacy at outpatient departments, and this correlation was significant.
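The article reports these correlations as significant without giving coefficients. As a rough sketch of what a Pearson correlation between age and a numerically coded response involves, the example below computes r directly from its definition. The data are synthetic toy values, and the numeric coding of responses (1 = No through 5 = Sure) is an assumption made purely for illustration; neither comes from the study, whose analysis was run in SPSS.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from the means.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cross / math.sqrt(ss_x * ss_y)


# Synthetic toy data, NOT from the study: ages and hypothetical coded responses.
toy_ages = [26, 31, 38, 44, 52, 60]
toy_scores = [2, 3, 3, 4, 4, 5]

r = pearson_r(toy_ages, toy_scores)
print(f"r = {r:.2f}")
```

A positive r here would correspond to the direction described in the text (agreement rising with age); judging significance additionally requires a p-value, which this sketch does not compute.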

Discussion

ChatGPT, an AI-based language model with considerable potential, has recently emerged and excels across various fields, including medicine and scientific writing, necessitating further exploration [15].

The study examined the perceptions of physicians regarding the use of ChatGPT in outpatient clinics. The results indicated that a substantial percentage of participants were positive about the potential benefits of ChatGPT implementation. Notably, on whether ChatGPT improves efficiency at outpatient clinics, 48(18.5%) respondents were sure and 131(50.6%) thought it was likely. The majority of participants believed that ChatGPT understands medical terminology and jargon; 45(17.4%) were sure and 155(59.8%) thought it was likely. Moreover, most of the participants were optimistic that ChatGPT could provide accurate medical advice; 161(62.2%) thought it was likely.

However, there were also reservations among participants. For instance, the majority did not think it would give a more personalized experience than human doctors; only 2(0.8%) were sure that it can. In contrast, another article suggests that ChatGPT can provide personalized recommendations on topics such as nutrition plans, exercise programs, and psychological support [19]. Another reservation concerned ChatGPT's ability to provide emotional support; 147(56.8%) participants thought it was not likely to provide emotional support, while 68(26.3%) thought it cannot. Most of the respondents, 207(79.9%), did not think that ChatGPT can replace human doctors in outpatient departments. Another study showed that a substantial proportion of medical students (76.4%) and doctors (67.7%) either strongly disagreed or disagreed with the notion that AI would replace physicians in the future [16]. In a separate study, 61.86% of respondents expressed concerns about the possibility of reduced human interactions as AI usage continues to grow in the future [17].

Additionally, only a few of the participants believed that ChatGPT caters to individual patient needs while prescribing (considering contraindications, side effects, and other health conditions); 192(74.1%) thought it did not. Another study revealed that 56.5% of the respondents thought it was “Extremely unlikely” that AI can formulate personalized treatment plans for patients [20]. Most importantly, 85.7% of the participants did not think it is ethical to use ChatGPT alone to diagnose, investigate or treat patients. Urgent action is required to develop standard operating procedures and recommendations that ensure the safe and responsible use of ChatGPT [15].

Furthermore, while 78.4% of participants perceived a positive impact of ChatGPT in outpatient departments, 21.6% thought otherwise. It is worth noting that 47.1% of participants thought they were not likely to recommend its use to fellow doctors.

Most of the participants believed that ChatGPT is 70

Conclusion

In conclusion, while there is significant potential for ChatGPT to enhance outpatient clinics' efficiency and help physicians as a cognitive assistant, there are concerns and considerations that need to be addressed for its successful implementation. Further research and development are essential to capitalize on the benefits of ChatGPT while addressing potential challenges in the healthcare setting.

Conflict of Interest: The authors declare no conflict of interest.

Funding Source: None

Ethical Approval: Given

Authors' Contribution: All the authors contributed equally in accordance with ICMJE guidelines

References

1. Cascella, M., Montomoli, J., Bellini, V., & Bignami, E. (2023). Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. Journal of Medical Systems, 47(1), 33.

2. Lo, C. K. (2023). What is the impact of ChatGPT on education? A rapid review of the literature. Education Sciences, 13(4), 410.

3. Johnson, D., Goodman, R., Patrinely, J., Stone, C., Zimmerman, E., Donald, R., ... & Wheless, L. (2023). Assessing the accuracy and reliability of AI-generated medical responses: an evaluation of the Chat-GPT model. Research Square.

4. Rao, A., Kim, J., Kamineni, M., Pang, M., Lie, W., & Succi, M. D. (2023). Evaluating ChatGPT as an adjunct for radiologic decision-making. medRxiv, 2023-02.

5. Mello, M. M., & Guha, N. (2023, May). ChatGPT and physicians’ malpractice risk. In JAMA Health Forum (Vol. 4, No. 5, pp. e231938-e231938). American Medical Association.

6. Sonntagbauer, M., Haar, M., & Kluge, S. (2023). Künstliche Intelligenz: Wie werden ChatGPT und andere KI-Anwendungen unseren ärztlichen Alltag verändern? Medizinische Klinik-Intensivmedizin und Notfallmedizin, 118(5), 366-371.

7. Patel, S. B., & Lam, K. (2023). ChatGPT: the future of discharge summaries? The Lancet Digital Health, 5(3), e107-e108.

8. Ali, S. R., Dobbs, T. D., Hutchings, H. A., & Whitaker, I. S. (2023). Using ChatGPT to write patient clinic letters. The Lancet Digital Health, 5(4), e179-e181.

9. Dave, T., Athaluri, S. A., & Singh, S. (2023). ChatGPT in medicine: an overview of its applications, advantages, limitations, future prospects, and ethical considerations. Frontiers in Artificial Intelligence, 6, 1169595.

10. Meskó, B., Hetényi, G., & Győrffy, Z. (2018). Will artificial intelligence solve the human resource crisis in healthcare? BMC Health Services Research, 18, 1-4.

11. Shoufan, A. (2023). Exploring students’ perceptions of ChatGPT: Thematic analysis and follow-up survey. IEEE Access, 11, 38805-38818.

12. Shen, Y., Heacock, L., Elias, J., Hentel, K. D., Reig, B., Shih, G., & Moy, L. (2023). ChatGPT and other large language models are double-edged swords. Radiology, 307(2), e230163.

13. Ray, P. P. (2023). ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems, 3, 121-154.

14. Ayers, J. W., Poliak, A., Dredze, M., Leas, E. C., Zhu, Z., Kelley, J. B., ... & Smith, D. M. (2023). Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum. JAMA Internal Medicine, 183(6), 589-596.

15. Sallam, M. (2023, March). ChatGPT utility in healthcare education, research, and practice: systematic review on the promising perspectives and valid concerns. In Healthcare (Vol. 11, No. 6, p. 887). MDPI.

16. Kansal, R., Bawa, A., Bansal, A., Trehan, S., Goyal, K., Goyal, N., & Malhotra, K. (2022). Differences in knowledge and perspectives on the usage of artificial intelligence among doctors and medical students of a developing country: a cross-sectional study. Cureus, 14(1).

17. Bisdas, S., Topriceanu, C. C., Zakrzewska, Z., Irimia, A. V., Shakallis, L., Subhash, J., ... & Ebrahim, E. H. (2021). Artificial intelligence in medicine: a multinational multi-center survey on the medical and dental students' perception. Frontiers in Public Health, 9, 795284.

18. Shrestha, N., Shen, Z., Zaidat, B., Duey, A. H., Tang, J. E., Ahmed, W., ... & Cho, S. K. (2024). Performance of ChatGPT on NASS clinical guidelines for the diagnosis and treatment of low back pain: a comparison study. Spine, 49(9), 640-651.

19. Arslan, S. (2023). Exploring the potential of Chat GPT in personalized obesity treatment. Annals of Biomedical Engineering, 51(9), 1887-1888.

20. Jha, N., Shankar, P. R., Al-Betar, M. A., Mukhia, R., Hada, K., & Palaian, S. (2022). Undergraduate medical students’ and interns’ knowledge and perception of artificial intelligence in medicine. Advances in Medical Education and Practice, 13, 927.